The first vision and naming of the metaverse originated in the science fiction novel Snow Crash by Neal Stephenson in 1992. In it, the metaverse was a shared multiplayer online game made available over the world’s fibre optics network and projected onto virtual reality goggles.

Users could control avatars that could interact with other avatars and computer-controlled agents. An avatar in that metaverse could gain status through its technical acumen in navigating the arena and gaining access to exclusive spaces. Ready Player One, written by Ernest Cline in 2011 and adapted into a film in 2018, followed on with this concept in a future view of the world in 2045, where users escaped the real world by entering a metaverse called Oasis, accessed with a VR headset and wired gloves.

Multiplayer games and ‘Avatars’

The use of avatars extends even further back than these novels. In the early 1970s, Steve Colley and Howard Palmer invented a multiplayer game called MazeWar that could be played over ARPANET, a precursor to the internet. The game’s first avatar was a graphical eyeball that moved through the maze, pointing in the direction it was travelling, to shoot other players. In the 1980s, the Commodore 64 computer had a virtual world, Habitat, with cartoon-like avatars that could walk around and communicate with chat bubbles. As the internet ramped up by 1994, WorldsChat created a space-station-themed virtual space for avatars to have social interaction and explore the various rooms. Out of that effort, a more advanced programme, AlphaWorld, featured 700 themed rooms or Active Worlds, with 12 different avatars and more interaction with the game, and reached 250,000 cumulative users. Other services, including Worlds Away, Virtual Places, Comic Chat and The Palace, also offered these virtual rooms.

Communities and Services

In 2000, Finnish company Sulake created Habbo (formerly Habbo Hotel), an online community that has accumulated 316 mn avatars since launch and now has 800,000 active users. The main feature in the game is a hotel where users can visit public areas (restaurants, cinemas and clubs) and create guest rooms. The users in this community can create a character, design and build rooms, chat with other players and take care of virtual pets.

The early services formed the building blocks for Second Life, a virtual online world that launched in 2003 from Linden Lab. By 2013, the company said that 36 mn accounts had been created, with 1 mn monthly active users who had spent 217,000 cumulative years online, on territory comprising over 700 square miles, and had spent US$3.2 bn on in-world transactions.

The users in Second Life created avatars to interact with places, objects and other avatars through chat, IM or voice. The avatars could take any form or resemble their real-life form and could travel by walking, vehicle, flying or teleporting. The community allowed a variety of socialising, games, group activities, and opportunities to build, shop, create and trade property and services. The service also used a virtual currency called the Linden dollar to buy, sell, rent or trade goods and services. The goods could include buildings, vehicles, clothes, art or jewellery, and services could include entertainment, custom content, or business management.

Second Life went into decline as it was overtaken by other social media platforms and did not adapt well to mobile. Second Life’s chief architect, Philip Rosedale, noted several limitations in an IEEE Spectrum interview in November 2021: adults’ reluctance to socialise with strangers online, technical challenges in getting more than 100 people together in a copy of a concert space, a need for better toolkits so that large numbers of people can build the experiences and content, and a need for a better common currency that can unify the diverse tokens that each platform uses. He also sees VR as still having issues to solve around comfort, typing speed and communicating comfortably with others.

A reporter from Reuters, in a series published in 2007-08, noted issues including limited support for new joiners to make the most of the platform, an overly complicated user interface, IT issues (crashes and unstable IM), and a high weighting towards adult content. The reporter did still note an incredible depth, passion and camaraderie in the community, and some interest in being able to buy a grid of space and mould it into something.

The Second Life site still claims 750,000 monthly active users on the platform and US$650 mn in annual transactions, though this is marginal relative to the major social media platforms, and the user base never ramped much above 1 mn people. Linden Lab created a follow-up VR-based virtual world called Project Sansar, but it did not ramp up well, so the company returned to focussing on Second Life and sold Project Sansar to Wookey Project Group. That group is now focussing on virtual concerts, including pre/after parties.

| METAVERSE: A STILL-EVOLVING CONCEPT

Awareness of the metaverse has increased substantially in recent months with Facebook’s renaming of the company as Meta in October 2021 and its focus on driving all of its efforts towards building out the metaverse, including most recently renaming its Oculus Quest VR glasses as Meta Quest. While the renaming of Facebook did bring increased attention to the concept, there isn’t really an agreed definition as to what the metaverse is or how it will evolve. In this section, we outline how various key technology companies are viewing and defining this opportunity, along with providing frameworks from two industry experts/commentators.

CS Summary view of the Metaverse

While industry participants hold various views as to what constitutes a metaverse, in our view it essentially boils down to an evolution into a more immersive 3D internet with upgrades along five key vectors (and different commentators’ sub-divisions of these vectors):

● Devices/Hardware.

The key interface between the user (humans) and the metaverse. These could be smartphones (which evolve and add functionality over the coming years), dedicated or linked AR/VR devices, or completely new dedicated hardware.

● Infrastructure.

The network and devices that connect the hardware device to the content: 5G networks, Wi-Fi, edge computing implementations and eventually 6G.

● Content.

All the various types of software and content including gaming.

● Community.

All the various use cases with many (theoretically unlimited) individuals/users who interact and socialise within the platform and also across applications/platforms (use cases).

● Currency/settlement.

The method used to “settle” transactions for participation, content creation or direct commerce.

In the following section, we further dig into the definition of and the key concepts around building out the metaverse from various leading companies in the technology world as well as views/definitions of the concept from some prominent industry commentators.

| META’S VIEW: METAVERSE AS AN EMBODIED INTERNET

The “meta” in metaverse comes from the Greek word for “beyond”, and the metaverse is about creating a next generation of the internet beyond the constraints of screens and physics. The metaverse is expected to be the next platform for the internet, with the medium even more immersive: an embodied internet where people are in the experience, not just looking at it. Users will be able to do almost anything they can imagine: get together with friends and family, work, learn, play, shop and create, with entirely new categories not available on phones/computers today.

The metaverse will be the successor to the mobile internet, enabling people to feel present and express themselves in new immersive ways. That presence should allow people to feel they are together even if they are apart, whether chatting with family, playing games and feeling like they are together in the world of that game, or conducting meetings as if face to face. The embodied internet would mean that, instead of looking at a screen, users would feel they are in a more natural and vivid experience while connecting socially and during entertainment, games and work, through a deep feeling of presence.

Meta sees several foundational concepts as required for the metaverse:

● Presence

The defining quality of the metaverse: this should enable users to see people’s facial expressions and body language, and to feel in the moment by being more immersed.

● Avatars

Avatars will be how people represent themselves, rather than a static profile picture. The codec avatar is a 360-degree photorealistic avatar that can transform the profile image into a 3D representation with expressions and realistic gestures that can make interactions richer. The avatar could have a realistic mode but also modes used for work, socialising and gaming, along with clothing designed by creators that can be taken across different applications.

● Home space

The home space can recreate parts of the physical home virtually, add new parts virtually and add in customised views. The home space can store pictures, videos and purchased digital goods, host people for games and socialising, and include a home office for work.

● Teleporting

A user can teleport anywhere around the metaverse to any space just like clicking a link on the internet.

● Interoperability

Interoperability would allow someone to buy or create something that is not locked into one platform and can be owned by the individual rather than the platform. Meta is building an API (Application Programming Interface) to allow users to take their avatar and digital items across different apps and experiences. The interoperability would require ecosystem building, norm setting and new governance.

● Privacy and safety

The metaverse needs to build in privacy, safety, interoperability and open standards from the start, with features allowing a user choice in who they are with, the ability to be private or to block another user. The metaverse needs easy-to-use safety controls and parental controls, and also to take out the element of unexpected surprises.

● Virtual goods

The metaverse would allow users to bring items into the metaverse or project them into the physical world. A user can bring any type of media represented digitally (photos, videos, art, music, movies, books and games) into the metaverse. These items can also be projected into the physical world as holograms or AR objects. Street art could be sent over and paid for. Clothing that is accurate, realistic and textured can also be created and purchased.

● Natural interfaces

All kinds of devices will be supported. The metaverse will have the ability to be used on all types of devices ranging from using virtual reality glasses for full immersion to AR to still be present in the physical world, or through the use of a computer or phone to quickly jump in from existing platforms. Interaction and input can be through typing or tapping, gestures, voice recognition or even thinking about an action. In a future world, the user would not even need a physical screen as they could view a hologram for the images throughout the virtual world.

| MICROSOFT’S VIEW: BRINGING PEOPLE TOGETHER & FOSTERING COLLABORATION

Microsoft defined the metaverse as a persistent digital world inhabited by digital representations of people, places and things. The metaverse can be thought of as a new version of the internet where people can interact as they do in the physical world, and gather to communicate, collaborate and share with personal virtual presence on any device.

The company views the metaverse as no longer just a vision, citing already-existing use cases such as the ability to go to concerts and shows with other real people inside a video game, to walk a factory floor from home, or to join a meeting remotely but be in the room virtually to collaborate with other workers.

The company believes the metaverse has the ability to stretch us beyond the barriers and limitations of the physical world, which proved to be a larger barrier when COVID-19 prevented work from the office or travelling to visit clients, friends and family. Microsoft is working on tools to help individuals represent their physical selves better in the digital space and bring that humanity with the person into the virtual world. The capabilities it is enabling include teammates joining meetings from anywhere and real-time translation that allows people from different cultures to collaborate in real time.

With the announced intention to acquire leading game developer Activision and its earlier acquisition of Minecraft, the company is driving big investment into virtual connectivity in gaming. It discussed its view that in gaming, the metaverse would be a collection of communities with individual identities anchored in strong content franchises accessible on every device. Activision would help it pull together the resources to create virtual worlds filled with both professionally produced and user-generated content for rich social connections.

The company also views its Azure offerings for business as well suited for the metaverse, as noted by Satya Nadella at the May 2021 Build Developer Conference: (1) with Azure Digital Twins, users can model any asset or place; (2) with Azure IoT, the digital twin can be kept live and up-to-date; (3) Synapse tracks the history of digital twins and finds insights to predict future states; (4) Azure allows its customers to build autonomous systems that continually learn and improve; (5) Power Platform enables domain experts to expand on and interact with digital twin data using low-code/no-code solutions; and (6) Mesh and HoloLens bring real-time collaboration.

| GOOGLE: DEVELOPMENT OF AR/VR COULD REBOOT WITH ITS AMBIENT COMPUTING PUSH

Google has been an early visionary for mixed reality products, having introduced its Google Glass for developers in 2013 and smartphone-driven VR systems in the form of Google Cardboard in 2014 and the Daydream headset in 2016. The company has a goal of driving ambient computing, which means users can access its services from wherever they are, and those services become as reliable and essential as running water. The company’s hardware strategy around smartphones/tablets, Nest home devices and a potential re-emergence into AR/VR is tied to this ambient compute experience.

For Google Glass, the company launched the Glass Enterprise Edition 2 in 2019, featuring the Snapdragon 710 chipset, 3GB RAM, a 640x360 display, an 8MP camera, three microphones, USB-C and an 820mAh quick-charge battery, priced at US$999. The device was geared more to enterprise use for hands-free access to information and tools, and the ability to view instructions and send photos/videos of work to colleagues for collaboration.

Google is showing progress towards bringing a new set of AR/VR devices to market. The company spent US$180 mn to acquire North Inc., a Canadian company making smart glasses that project onto an optical prescription lens and include a built-in mic and a ring accessory for commands. North is also the developer of Myo, a gesture band that recognises neuromuscular movements and could be useful for control functions in AR/VR. North had previously acquired Intel’s smart glass patents in 2018.

The company recently hired former Oculus GM Mark Lucovsky, who is now leading Google’s Operating System team and the experiences delivered on top of the OS for AR. In its recruiting posts for AR hardware developers, hardware engineers and software developers, Google indicated it is building the foundations for substantial immersive computing, and building helpful and delightful user experiences to make it accessible to billions of people through mobile devices. The company also indicated that the work includes building software components that control and manage the hardware on its AR products.

| APPLE: DESIGNING ITS ECOSYSTEM ALREADY AROUND AR

Apple views AR as transforming how people work, learn, play, shop and connect with the world, and as the perfect way to visualise things that would be impossible or impractical to see otherwise. Apple claims it has the world’s largest AR platform, with hundreds of millions of AR-enabled devices and thousands of AR apps on its App Store. CS expects Apple to launch its first mixed reality device in late 2022, manufactured by Pegatron, though the initial projections are for small unit volumes (1-2 mn). The product may be the first stage to unleash more creativity among its developer community to move AR from phone/tablet viewing to 3D mixed reality viewing.

Apple’s development kit is now on the fifth generation of its ARKit. This includes the following key features to enable the development of AR applications:

● Hardware platform

Apple believes its cameras, displays, motion sensors, graphics, machine learning and developer tools can enable realistic AR experiences, which are also built into iOS, Safari, Mail, Messages and Files.

● RealityKit 2

The RealityKit allows rendering, camera effects, animations and physics to enable AR. It features object capture that can turn pictures into 3D models, custom shaders to blend virtual content with the real world and a Swift API to create player-controlled characters to explore the AR world.

● Reality Converter

Reality Converter can build, test, tune and simulate AR experiences for work on all AR-enabled iPhones/iPads. It includes a built-in AR library, tools for composing AR, and animations and audio for the AR objects.

● Object occlusion

By combining information from the LiDAR scanner and edge detection in RealityKit, virtual objects can interact with the physical surroundings in a realistic manner.

● Facetracking

Facetracking is supported by the front-facing and ultra-wide cameras to track faces for Snapchat and emoji camera experiences.

● Clips

AR spaces use LiDAR to sense depth to enhance the real-world environment with playful and immersive effects. An example use case is the Snapchat messenger with add-on AR effects.

● Location anchors

Location anchors can place objects at specific places in cities or landmarks that users can move around or see from different perspectives.

● ARki

ARki helps visualise 3D projects in AR to view, share and communicate designs. ARki uses LiDAR and people occlusion technology to place and visualise objects at world scale for realism or as a miniature on a desk. An example is Warby Parker’s application to see how glasses appear on the face using the TrueDepth camera on the phone.

● AR Quick Look

The built-in iOS apps can use Quick Look to display files of virtual objects in 3D or AR on an iPhone. The Quick Look views in applications and websites can let users view object renderings in real-world surroundings with accompanying audio.

● DSLR Camera

The LiDAR scanner can capture depth so text and graphics can be moved behind people and objects in seconds. IKEA Place is one application that can leverage the LiDAR scanner to demo room furnishings.

| NVIDIA: OMNIVERSE TO CREATE & CONNECT WORLDS WITHIN THE METAVERSE

Nvidia also defined the metaverse in its August 2021 blog as a shared virtual 3D world, or worlds, that are interactive, immersive and collaborative and as rich as the real world. It views it as going beyond the gaming platforms (Roblox, Minecraft and Fortnite) and video conferencing tools aimed at collaboration. The metaverse would become a platform that is not tied to any one app or any single digital or real place. The virtual places would be persistent, and the objects and identities moving through them can move from one virtual world to another or into the real world with AR.

Nvidia views its Omniverse as the “plumbing” on which metaverses can be built; a platform for connecting 3D worlds into a shared virtual universe. The platform is already used across industries for design collaboration and creating digital twin simulations of real-world buildings, factories, robots, self-driving cars and avatars. It sees this modelling of the natural world as a fundamental capability required for robotics. Omniverse users can create virtual worlds where robots powered by AI brains can undertake this training to learn from the real or digital environments. Once trained in the Omniverse, the capability can be loaded on an Nvidia Jetson processor and connected to a real robot or factory automation equipment. The Omniverse factory can also connect to ERP to simulate throughput, plant layouts or for factory dashboards.

Nvidia’s Omniverse has a few key elements:

● Omniverse Nucleus

Nucleus is a database engine to connect multiple users and enable the interchange of 3D assets and scene descriptions. Designers can collaborate to create a scene with modelling, layout, shading, animation, lighting, special effects and rendering using industry-standard applications such as Autodesk Maya, Adobe Photoshop and Epic Games’ Unreal Engine. The platform relies on universal scene description (USD), an interchange framework invented by Pixar in 2012 to provide a common language for defining, packaging, assembling and editing 3D data, similar to HTML’s use in web programming.

● Omniverse Composition, rendering and animation engine

The engine simulates the virtual world and incorporates physics, materials and lighting effects, and can be fully integrated with Nvidia’s AI frameworks. The engines are cloud native and can scale across multiple GPUs over any of the RTX platforms to stream to any device. With Omniverse, artists can see live updates made by other artists working in different applications and changes reflected in multiple tools at the same time.

● Nvidia CloudXR

CloudXR is the client and server software to stream extended reality content from OpenVR applications to iOS, Android and Windows devices. CloudXR allows users to portal into and out of the Omniverse. The service allows users to teleport into Omniverse with VR or have digital objects teleport out with AR. Nvidia views devices such as Oculus Quest and Microsoft’s HoloLens as steps toward fuller immersion, with Nvidia’s Omniverse as the high-fidelity simulation of the virtual world to feed the display.

● Omniverse Avatar

The technology platform connects Nvidia’s speech AI, computer vision, natural language understanding, recommendation engines and simulation to generate interactive AI avatars. The avatars created are able to see, speak and converse on a wide range of subjects to allow the creation of AI assistants that are customisable to different industries (hotel/restaurant reservations/check-ins).

The Omniverse platform has been downloaded by over 70,000 creators and used by over 700 companies including BMW, Ericsson, Lockheed Martin and Sony Pictures. The platform is available through hardware makers such as Dell, HP, Lenovo and Supermicro along with major distributors with Omniverse Enterprise on a subscription model.

| NIANTIC’S VIEW: METAVERSE DRIVEN BY AR

Niantic, the developer of Pokemon Go, which was originally spun out of Google, published a blog in August 2021 building its vision for the metaverse around AR rather than VR. It views the world in science fiction novels such as Snow Crash and Ready Player One as a dystopian future of technology gone wrong, where users need to escape a terrible real world with VR glasses to go into the virtual world. The company views VR as a sedentary process of slipping into a virtual world and being cut off from everyone around you, with an avatar as a poor substitute for real human-to-human interaction. The company believes VR glasses remove the real sense of presence that comes from being with people, which is difficult to replicate while staring into OLED display goggles.

Niantic is leaning into AR in order to be able to be outside and connect with the physical world with AR as an overlay to enhance those experiences and interactions, and get people back outside and active by learning about their city and community.

The company’s view of the metaverse is a world infused with “reality channels”, where data, information, services and interactive creations can be overlaid on the real world. The company incorporated these into its products Field Trip, Ingress and Pokemon Go as games that can make the world more interesting. The capability, though, can stretch beyond games and entertainment, as the AR can allow education, guidance, and assistance anywhere from work sites to knowledge work.

Niantic is also developing a visual positioning system (VPS) that can place virtual objects in a specific location so those objects can persist to be discovered by other people using the same application. With live production code, it has mapped thousands of locations. Niantic is attempting to build a much more in-depth digital map, beyond Google Maps, which can recognise location and orientation anywhere in the world by leveraging computer vision and deep-learning algorithms, along with the millions of users playing its games such as Pokemon Go.

The company’s vision follows Alan Kay’s 1972 Dynabook paper that discussed the trend of continuing to shrink compute (from mainframes now down to smartphones/wearables) and eventually to compute devices disappearing into the world. Niantic envisions shifting the primary compute surface from the smartphone to AR glasses to remove the demands on the hands, making it easier to access data and services and view overlays on the real world. Niantic has partnered with Qualcomm to invest in a reference design for outdoor-capable AR glasses that can orient themselves using Niantic’s map, and render information and virtual worlds on top of the physical world, with open platforms allowing many partners to distribute compatible glasses.

Niantic has released its Lightship developer platform to create AR experiences that could allow a “shared state” where everyone can see the same AR enhancements to the world. The Lightship platform has an ARDK development kit to allow creators and sponsors to build AR experiences that can take advantage of the digital map to overlay virtual objects on the real world. With Occlusion APIs, virtual objects can appear at the right depth relative to real-world objects, taking into account the location and contextual understanding of the surrounding spaces. With Semantic Segmentation APIs, computer vision can identify environment areas such as ground, sky, water and buildings so virtual content can react with the real space. The multiplayer APIs also allow developers to support AR sessions with up to five concurrent players. Some use cases it envisions include AR overlays such as arrows directing users through a train station, menus appearing outside a restaurant or a reservation UI outside a hotel.
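The occlusion idea described above can be illustrated with a per-pixel depth test. The following is a minimal sketch, not Lightship’s actual Occlusion API: the function name `composite_with_occlusion`, the tiny 2x2 “frames” and the depth values are all hypothetical and chosen only to show the principle that a virtual pixel is drawn only where it is closer to the camera than the real-world surface.

```python
from typing import List, Optional


def composite_with_occlusion(
    camera: List[List[str]],
    real_depth: List[List[float]],
    virtual: List[List[Optional[str]]],
    virtual_depth: List[List[float]],
) -> List[List[str]]:
    """Blend a virtual layer over a camera frame using a per-pixel depth test.

    A virtual pixel is shown only if it exists (is not None) and lies closer
    to the camera than the real-world surface at that pixel. Otherwise the
    camera pixel is kept, so real objects hide the virtual ones behind them.
    All inputs are hypothetical illustration data, not a real AR SDK format.
    """
    frame = []
    for cam_row, rd_row, v_row, vd_row in zip(camera, real_depth, virtual, virtual_depth):
        out_row = []
        for cam_px, rd, v_px, vd in zip(cam_row, rd_row, v_row, vd_row):
            visible = v_px is not None and vd < rd
            out_row.append(v_px if visible else cam_px)
        frame.append(out_row)
    return frame


# Hypothetical 2x2 scene: depths are distances from the camera in metres.
camera = [["sky", "sky"], ["wall", "floor"]]
real_depth = [[50.0, 50.0], [1.0, 3.0]]
virtual = [["V", None], ["V", "V"]]          # None means no virtual content here
virtual_depth = [[2.0, 0.0], [2.0, 2.0]]

frame = composite_with_occlusion(camera, real_depth, virtual, virtual_depth)
print(frame)
```

In this toy scene the virtual object sits 2 m away, so it is hidden by the wall at 1 m but drawn in front of the floor at 3 m and the distant sky. A production system would estimate `real_depth` from sensors or computer vision rather than take it as given.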

| INDUSTRY COMMENTATORS’ VIEWS

As mentioned earlier, different companies and participants interpret what metaverse means differently. Having provided different views from a range of leading corporates, we also give views from some prominent industry commentators, one a venture capitalist and another an entrepreneur, regarding what a metaverse entails.

A) A venture capitalist’s definition of the metaverse and its development vectors.

Venture capitalist (VC) Matthew Ball defined the metaverse as a “massively scaled and interoperable network of real-time rendered 3D virtual worlds which can be experienced synchronously and persistently by an effectively unlimited number of users with an individual sense of presence and with continuity of data, such as identity, history, entitlements, objects, communications and payments”.

The metaverse, in his view, should be viewed as a quasi-successor state to the mobile internet as it would not replace the internet but will build on it and transform it just as mobile devices changed the access, companies, products/services and usage of the internet. As with mobile internet, the metaverse is a network of interconnected experiences and applications, devices and products, and tools and infrastructure. The metaverse places everyone in an embodied, virtual or 3D version of the internet on a nearly unending basis.

Some characteristics of the metaverse are that it would be: (1) persistent; (2) synchronous and live; (3) without caps on users, while providing each user with an individual sense of presence; (4) a fully functioning economy; (5) an experience that spans digital and physical worlds, private and public networks, and open and closed platforms; (6) a source of unprecedented interoperability of data, digital items/assets and content; and (7) populated by content and experiences created and operated by an incredibly wide range of contributors.

| THE VC IS TRACKING THE METAVERSE AROUND EIGHT CORE CATEGORIES

1 Hardware.

Technologies and devices to access, interact with and develop the metaverse (VR, phones, haptic gloves).

2 Networking.

Development of persistent real-time connections, high bandwidth and decentralised data transmission.

3 Compute.

Enablement of compute to handle the demanding functions (physics, rendering, data reconciliation and synchronisation, AI, projection, motion capture and translation).

4 Virtual platforms.

Creation of immersive and 3D environments/worlds to stimulate a wide variety of experiences and activity supported by a large developer and content creator ecosystem.

5 Interchange tools and standards.

Tools, protocols, services and engines to enable the creation, operation and improvement of the metaverse, spanning rendering, AI, asset formats, compatibility, updating, tooling and information management.

6 Payments.

Support of digital payments including fiat on-ramps to pure-play digital currencies/crypto.

7 Metaverse content, services, and assets.

The design, creation, storage and protection of digital assets such as virtual goods and currencies connected to user data and identity.

8 User behaviour.

Changes in consumer and business behaviour (spending and investment, time and attention, decision making and capability) associated with the metaverse.

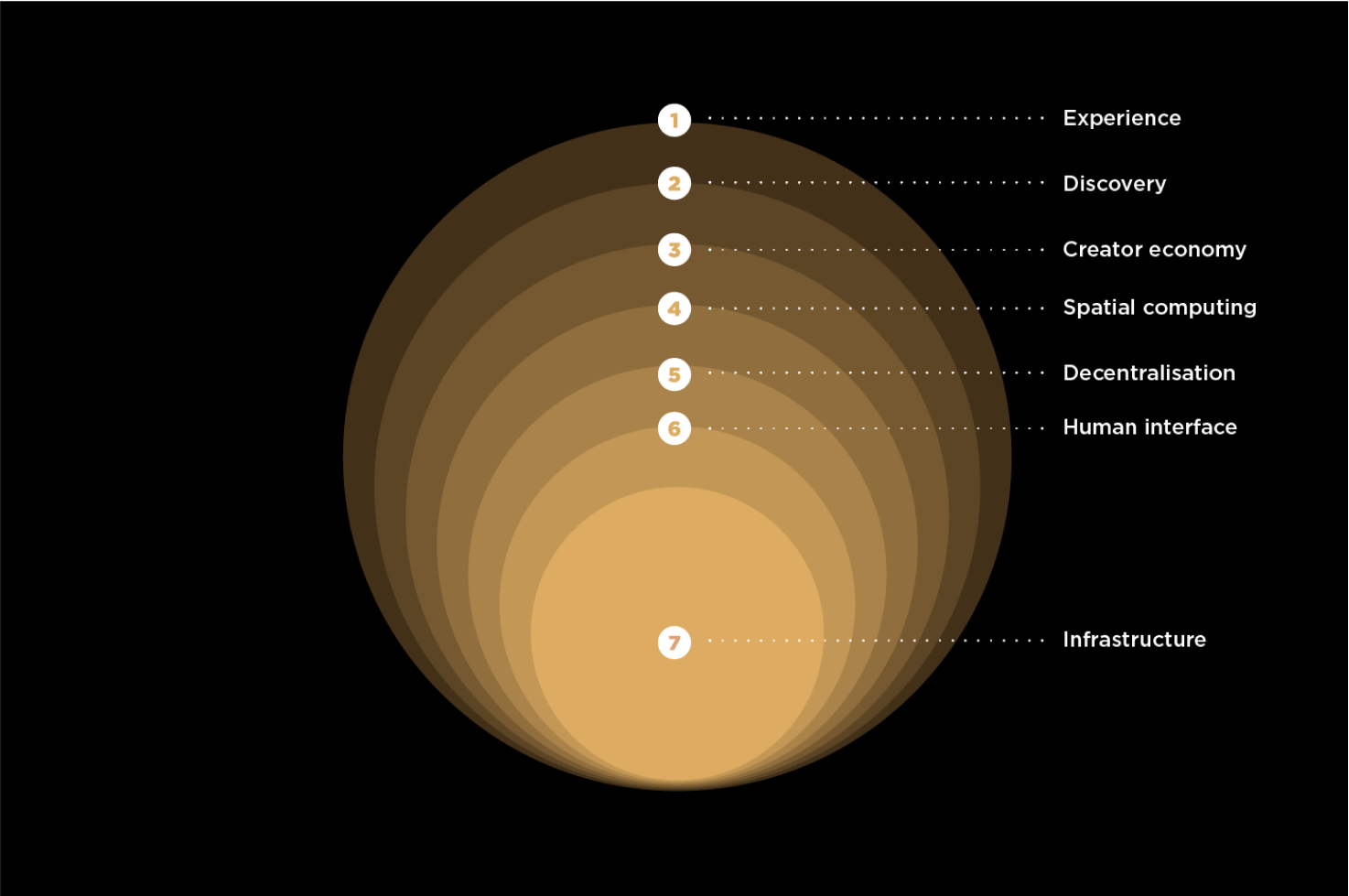

| AN ENTREPRENEUR’S VIEW: THE SEVEN LAYERS OF THE METAVERSE

Jon Radoff, CEO of Beamable, a Live Game Services platform, is another prominent industry commentator on the topic of the metaverse and is widely quoted in articles associated with the concept. His prior work has focussed on online communities, internet media and computer games. Jon sees the metaverse as composed of seven layers:

1 Experience.

The experience layer is where users do things in the metaverse including gaming, socialising, shopping, watching a concert or collaborating with co-workers. The metaverse experiences do not need to be 3D or 2D, or even necessarily graphical; it is about the inexorable dematerialisation of physical space, distance and objects. When physical space is dematerialised, formerly scarce experiences may become abundant.

2 Discovery.

The discovery layer is about the push and pull that introduces people to new experiences. Broadly speaking, most discovery systems can be classified as either inbound (the person is actively seeking information about an experience) or outbound (marketing that was not specifically requested by the person, even if they opted in). The discovery layer could include the curated portals, online agents, rating systems and advertising networks drawing users to discover different areas.

3 Creator economy.

Not only are the experiences of the metaverse becoming increasingly immersive, social, and real-time, but the number of creators who craft them is increasing exponentially. This layer contains all of the technology that creators use daily to craft the experiences that people enjoy.

4 Spatial computing.

Spatial computing has exploded into a large category of technology that enables us to enter into and manipulate 3D spaces, and to augment the real world with more information and experience. The key aspects of such software include: 3D engines such as Unity and Unreal Engine to display geometry and animation; geospatial mapping; voice and gesture recognition; data integration from devices and biometrics from people; and next-generation user interfaces.

5 Decentralisation.

The ideal structure of the metaverse is full decentralisation. Experimentation and growth increase dramatically when options are maximised, and systems are interoperable and built within competitive markets. Distributed computing powered by cloud servers and microservices provides a scalable ecosystem for developers to tap into online capabilities without needing to focus on building or integrating back-end capabilities. Blockchain technology, which enables value-exchange between software, self-sovereign identity and new ways of unbundling and bundling content and currencies, is a large part of decentralisation (this area of innovation can be called Web 3.0).

6 Human interface.

Computer devices are moving closer to our bodies, transforming us into cyborgs. Smartphones have evolved significantly from their early days and are now highly portable, always-connected powerful “computers”. With further miniaturisation, the right sensors, embedded AI technology and low-latency access to powerful edge computing systems, they will absorb more and more applications and experiences from the metaverse. Dedicated AR/VR hardware (Oculus Quest and smartglasses) is also coming into the market, and in the coming years will likely evolve significantly. Beyond smartglasses, there is a growing industry experimenting with new ways to bring us closer to our machines such as 3D-printed wearables integrated into fashion and clothing.

7 Infrastructure.

The infrastructure layer includes the technology that enables our devices, connects them to the network and delivers content. This includes the semiconductors, battery technology, cloud servers and storage, and the 5G and Wi-Fi transmission required. The infrastructure upgrades in compute, connectivity and storage, supplemented by AI, should dramatically improve bandwidth while reducing network contention and latency, with a path to 6G to increase speeds by yet another order of magnitude.

| WEB 3.0 ENVISIONS A MORE DECENTRALISED METAVERSE

Web 3.0 envisions an internet based on decentralised blockchains that use token-based economics for transactions. The new vision contrasts with Web 2.0, where the large internet platform companies have centralised a lot of the data and content. The term Web 3.0 was coined in 2014 by Ethereum co-founder Gavin Wood and in the past decade has seen more interest as a concept across tech companies, VCs, start-ups and blockchain advocates. A number of virtual communities in the metaverse are forming around a decentralised concept that may open up the community's rule making to a collective majority of individuals on the platform, and they are also adopting the token concept as virtual currency.

While the original “internet” Web1 was built on largely open-source standards, Web 2.0 leveraged those same open and standards-based technologies but ended up creating large and closed communities, often referred to as “walled-garden” ecosystems (Facebook/Meta and YouTube, for example). As Jon Radoff has argued in one of his posts, walled gardens are successful because they can make things easy to do and offer access to very large audiences. But walled gardens are permissioned environments that regulate what you can do, and extract high rents in exchange. He argues that there are three key features of Web 3.0 that should change this paradigm of Web 2.0:

Value-exchange (rather than simply information exchange)

The enabling technology for value-exchange is smart contracts on blockchains. The blockchain is a shared ledger that allows companies, applications, governments and communities to programmatically and transparently exchange value (assets, currencies and property, etc.) with each other, without requiring custodians, brokers or intermediaries. The ability to programmatically exchange value between parties is a hugely transformative development.
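To make the idea of programmatic value-exchange concrete, the following is a minimal sketch in Python. It is not a real blockchain or smart-contract API: `ToyLedger`, its `swap` method, and the account and asset names are all hypothetical, chosen only to illustrate how a shared record can move an asset and a payment between two parties in one step without a broker in between.

```python
from dataclasses import dataclass, field


@dataclass
class ToyLedger:
    """In-memory stand-in for a shared ledger (hypothetical, not a real blockchain)."""
    balances: dict = field(default_factory=dict)  # account -> token balance
    owners: dict = field(default_factory=dict)    # asset id -> owning account

    def swap(self, buyer: str, seller: str, asset: str, price: int) -> None:
        """Exchange an asset for tokens: both legs are applied, or neither is."""
        # Validate everything first so a failed trade changes nothing.
        if self.owners.get(asset) != seller:
            raise ValueError("seller does not own the asset")
        if self.balances.get(buyer, 0) < price:
            raise ValueError("buyer has insufficient balance")
        # All checks passed: move the payment and the asset together.
        self.balances[buyer] = self.balances.get(buyer, 0) - price
        self.balances[seller] = self.balances.get(seller, 0) + price
        self.owners[asset] = buyer


# Hypothetical accounts and asset, for illustration only.
ledger = ToyLedger(balances={"alice": 100, "bob": 0}, owners={"land-42": "bob"})
ledger.swap(buyer="alice", seller="bob", asset="land-42", price=30)
print(ledger.balances, ledger.owners)
```

In this sketch the rules of the exchange live in the code itself, which is the role a smart contract plays; a real blockchain additionally replicates the ledger across many participants so that no single party controls it.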

Self-sovereignty

An important part of Web 3.0 is inverting the current model where one uses one’s login details for “walled gardens” (such as Facebook or Google) to interact with several other online applications. Instead of having a company own one’s identity and then grant access to other applications, one would own one’s own identity and choose which applications to interact with. This can be accomplished by using certain digital wallets such as MetaMask (for Ethereum and ETH-compatible blockchains) or Phantom (used on the Solana blockchain). One’s wallet becomes one’s identity, which then allows one to use the various decentralised applications on the internet that need to interact with one’s currencies and property.

The re-decentralisation of the internet

Currently, there are substantial dependencies across the internet on a small number of highly centralised applications. But with Web3, the power shifts back to individual users, creators and application developers, with far fewer centralised authorities to extract rents or ask permission from. This transfer of power, together with the ability for users to certify their work on the blockchain and monetise it by exchanging it for tokens, is expected to lead to an explosion of new creativity in the form of applications, algorithms, artwork, music, AI/robots, virtual worlds and metaverse experiences, with more of the rewards staying in the hands of the owners and creators.

| The metaverse still has its limitations and risks

We do see significant potential from the latest wave of investments to upgrade to a more immersive internet but also see some limitations and risks in the latest cycle which pose obstacles and may limit its success.

AR/VR limitations

A truly immersive internet would benefit from a 360-degree VR field of view or AR glasses rather than access through traditional smartphones, tablets and PCs. VR saw a first wave of hype in 2016 with the launch of Oculus Rift, HTC Vive, PlayStation VR and many smartphone-based VR platforms, with every major trade show seeing long lines to experience the concept.

The first wave failed to live up to the hype, with only 2 mn units shipped. Early hardware limitations included tethering to a PC, vertigo and discomfort with extended use, a lack of AAA gaming titles and content, and isolation from others while wearing the headset. VR technology is improving with better processing and sensors, faster refresh rates, higher resolution and high-speed Wi-Fi that eliminates tethering, and content should improve with the new wave of metaverse funding. Nevertheless, VR would still cause some discomfort when worn for an extended time and would still isolate the user from their surroundings.

As an AR device, Google Glass also never made it past the demo stage, with the camera near the eyes raising concerns about others’ privacy. Newer models now keep the camera more discreet or eliminate it outright, but the space and power constraints still require a substantial improvement in the compute, battery and size of the electronics.

Mainstream interest in virtual worlds

The hurdle for virtual worlds is higher: earlier communities had difficulty sustaining activity 24/7, and virtual worlds also need to change the behaviour of users who still seek out real-world experiences. Virtual worlds are improving in audio and visual quality but still fall short on three of the five senses (smell, taste and touch) needed for full immersion. Some advocates, such as Niantic, are investing more aggressively in AR technologies, which bring elements of the digital world into the real world.

Policing the communities

Social media platforms have continuously faced issues over which content and authors to allow and which to censor, and over their ability to use AI and human monitors to take down abusive content. A decentralised metaverse, without the scale of resources that the major internet providers have, may also struggle to keep up with monitoring abuse on the platform.

NFT speculation, fakes and metaverse asset inflation

An NFT is a unique piece of data on the blockchain that claims to offer a certificate of authenticity or proof of ownership, although it does not restrict sharing or copying of the digital file or prevent the creation of other NFTs with identical associated files. NFTs have been associated with transfers of artwork, in-game assets, music and sports cards, and can be a way to pay creators for their work. The NFT market, though, is also seeing stolen goods, bubbles and the risk of over-saturation as more are created. The metaverse is also drawing headlines for rising real estate prices in some of the digital communities and for the high-priced resale of Gucci handbags that confer no right to carry them into the physical world.
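The point that a token can be unique while the file it points to is not can be shown with a small Python sketch. This is an illustration under stated assumptions, not how any particular NFT standard or marketplace works: `ToyNFTRegistry`, its `mint` method and the owner names are hypothetical, and a SHA-256 digest stands in for the link between a token and its associated file.

```python
import hashlib
from itertools import count


class ToyNFTRegistry:
    """Hypothetical in-memory registry: unique token ids, non-unique content."""

    def __init__(self):
        self._next_id = count(1)  # every minted token gets a fresh id
        self.tokens = {}          # token id -> (owner, hash of associated file)

    def mint(self, owner: str, file_bytes: bytes) -> int:
        """Record a new token; nothing checks whether the file was minted before."""
        token_id = next(self._next_id)
        digest = hashlib.sha256(file_bytes).hexdigest()
        self.tokens[token_id] = (owner, digest)
        return token_id


registry = ToyNFTRegistry()
artwork = b"bytes of the same digital image"

original = registry.mint("creator", artwork)   # the creator's token
copycat = registry.mint("impostor", artwork)   # a second token for the same file

# The two tokens are distinct records, yet they reference identical content.
print(original != copycat)
print(registry.tokens[original][1] == registry.tokens[copycat][1])
```

The registry guarantees only that each token id is distinct; establishing which token, if any, comes from the genuine creator depends on information outside the token itself.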
